TCP/IP Model: Transmission Control Protocol

To properly learn something, we have to start at the beginning. We will learn one concept at a time, process it, and then move on to the next.

The goal is consistent learning and absorbing information while feeling engaged and not overwhelmed.

I have divided the transport layer articles into three parts.

- Introduction to Transport layer

- UDP in Transport layer

- TCP in Transport layer

Goal of this article

In this article, I will discuss the Transmission Control Protocol (TCP), which operates in the transport layer of the five-layer TCP/IP network model.

Transmission Control Protocol

- TCP (Transmission Control Protocol) provides a reliable, connection-oriented service to the invoking application.

- TCP extends IP’s delivery service between two end systems to a delivery service between two processes running on the end systems.

- TCP also provides integrity checking by including error-detection fields in its segments’ headers.

- TCP provides reliable data transfer and congestion control.

Connection-Oriented Multiplexing and Demultiplexing

To understand TCP demultiplexing, we have to examine TCP sockets and TCP connection establishment closely.

- A TCP socket is identified by a four-tuple: (source IP address, source port number, destination IP address, destination port number). When a TCP segment arrives from the network at a host, the host uses all four values to direct (demultiplex) the segment to the appropriate socket.

- Two arriving TCP segments with different source IP addresses or source port numbers will (except for a TCP segment carrying the original connection-establishment request) be directed to two different sockets.
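To make the four-tuple rule concrete, here is a toy Python model of connection-oriented demultiplexing. It is a sketch, not a real socket API: the `Demultiplexer` class, its `deliver` method, the `"welcoming"` label for the listening socket, and the addresses and ports in the example are all hypothetical. A SYN aimed at the listening port goes to the welcoming socket, which creates a dedicated connection socket; every later segment is matched on all four values.

```python
from typing import Dict, Tuple

# (source IP, source port, destination IP, destination port)
FourTuple = Tuple[str, int, str, int]


class Demultiplexer:
    """Toy model of TCP demultiplexing on one host (hypothetical, not a real API)."""

    def __init__(self, listen_port: int) -> None:
        self.listen_port = listen_port
        # Maps each connection's four-tuple to the name of its connection socket.
        self.connections: Dict[FourTuple, str] = {}

    def deliver(self, src_ip: str, src_port: int, dst_ip: str, dst_port: int,
                syn: bool = False) -> str:
        """Return the name of the socket that receives this segment."""
        key = (src_ip, src_port, dst_ip, dst_port)
        if key in self.connections:
            return self.connections[key]          # all four values must match
        if syn and dst_port == self.listen_port:
            # Connection-establishment request: handled by the welcoming socket,
            # which creates a dedicated connection socket for this four-tuple.
            self.connections[key] = f"conn-{len(self.connections) + 1}"
            return "welcoming"
        return "no-socket"


demux = Demultiplexer(listen_port=80)
demux.deliver("10.0.0.1", 26145, "10.0.0.9", 80, syn=True)
demux.deliver("10.0.0.2", 26145, "10.0.0.9", 80, syn=True)
print(demux.deliver("10.0.0.1", 26145, "10.0.0.9", 80))  # conn-1
print(demux.deliver("10.0.0.2", 26145, "10.0.0.9", 80))  # conn-2
```

Both clients happen to use source port 26145, yet their segments reach different sockets because their source IP addresses differ.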

The TCP Connection

- TCP is said to be connection-oriented because before one application process can begin to send data to another, the two processes must first “handshake” with each other; that is, they must send some preliminary segments to each other to establish the parameters of the ensuing data transfer. This connection-establishment procedure is often referred to as a three-way handshake.

- A TCP connection provides a full-duplex service: if there is a TCP connection between Process A on one host and Process B on another host, then application-layer data can flow from Process A to Process B at the same time as application-layer data flows from Process B to Process A.

- A TCP connection is always point-to-point, that is, between a single sender and a single receiver.

- A TCP connection consists of buffers, variables, and a socket connection to a process in one host, and another set of buffers, variables, and a socket connection to a process in another host.
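Python's standard `socket` module can demonstrate a full-duplex, point-to-point connection on a single machine. In this sketch the operating system performs the handshake and manages the buffers and variables, and each endpoint both sends and receives on the same connection. The function names `recv_exact` and `full_duplex_demo`, the loopback address, and the message contents are illustrative choices, and the sketch assumes the OS permits local TCP connections.

```python
import socket


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; TCP is a byte stream, so one recv() may return less."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("peer closed the connection early")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def full_duplex_demo() -> tuple[bytes, bytes]:
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))   # port 0: the OS picks a free port
        listener.listen(1)
        port = listener.getsockname()[1]
        # The kernel runs the three-way handshake for create_connection/accept.
        with socket.create_connection(("127.0.0.1", port)) as a:
            b, _ = listener.accept()
            with b:
                msg_a, msg_b = b"from A to B", b"from B to A"
                a.sendall(msg_a)          # Process A -> Process B
                b.sendall(msg_b)          # Process B -> Process A, same connection
                return recv_exact(b, len(msg_a)), recv_exact(a, len(msg_b))


print(full_duplex_demo())  # (b'from A to B', b'from B to A')
```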

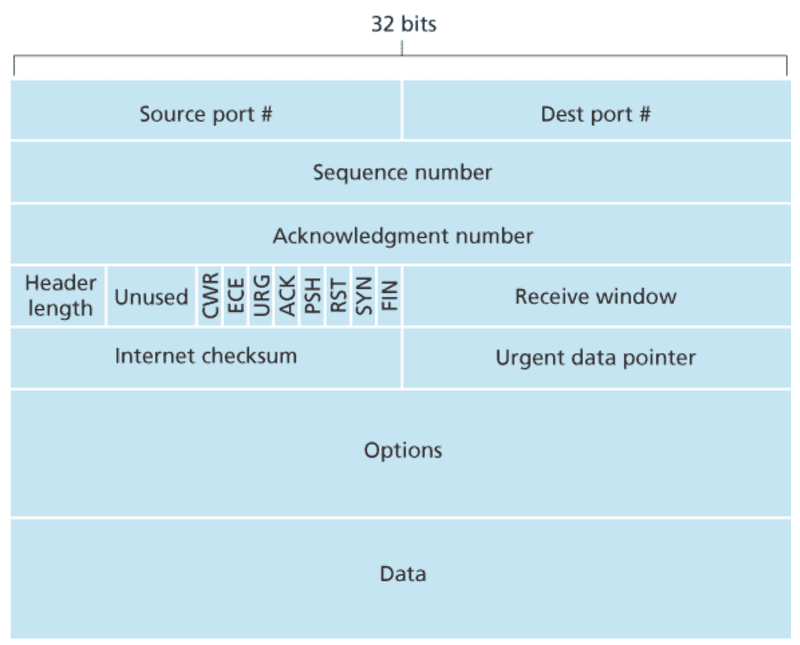

TCP Segment Structure

The TCP segment consists of header fields and a data field. The data field contains a chunk of application data.

As with UDP, the header includes source and destination port numbers, used for multiplexing/demultiplexing data from/to upper-layer applications. Also, as with UDP, the header includes a checksum field.

A TCP segment header also contains the following fields:

Sequence number and acknowledgment number

- These two 32-bit fields are used by the TCP sender and receiver in implementing a reliable data transfer service.

- TCP views data as an unstructured but ordered stream of bytes. TCP’s use of sequence numbers reflects this view in that sequence numbers are over the stream of transmitted bytes and not over the series of transmitted segments. Therefore, the sequence number for a segment is the byte-stream number of the first byte in the segment.

- The acknowledgment number that a host puts in its segment is the sequence number of the next byte it expects from the other host. Because TCP acknowledges bytes only up to the first missing byte in the stream, TCP is said to provide cumulative acknowledgments.

- Both sides of a TCP connection randomly choose an initial sequence number. Randomization is done to minimize the possibility that a segment that is still present in the network from an earlier, already-terminated connection between two hosts is mistaken for a valid segment in a later connection between these same two hosts (which also happen to be using the same port numbers as the old connection).

Receive window

- The 16-bit receive window field is used for flow control.

- It is used to indicate the number of bytes that a receiver is willing to accept.

Header length

- The 4-bit header length field specifies the length of the TCP header in 32-bit words.

- The TCP header can be of variable length due to the TCP options field.

- Typically, the options field is empty, so the length of the typical TCP header is 20 bytes (a header length value of 5).

Options

- The optional, variable-length options field is used when a sender and receiver negotiate the maximum segment size (MSS), or as a window-scaling factor for use in high-speed networks.

Flags

- The original TCP specification defines 6 flag bits (URG, ACK, PSH, RST, SYN, FIN); explicit congestion notification later added two more (CWR and ECE).

- The ACK bit is used to indicate that the value carried in the acknowledgment field is valid; that is, the segment contains an acknowledgment for a segment that has been successfully received.

- The RST, SYN, and FIN bits are used for connection setup and teardown.

- The CWR and ECE bits are used in explicit congestion notification.

- Setting the PSH bit indicates that the receiver should pass the data to the upper layer immediately.

- The URG bit is used to indicate that there is data in this segment that the sending-side upper-layer entity has marked as urgent.

Urgent data pointer

- The location of the last byte of the urgent data is indicated by the 16-bit urgent data pointer field.
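The field layout can be checked by parsing a raw header with Python's `struct` module. This is a minimal sketch assuming a 20-byte, options-free header in network byte order; the names `parse_tcp_header` and `TcpHeader` and the values in the example are illustrative. The flag names follow the bit order of the header's flag byte, from FIN (lowest bit) to CWR (highest).

```python
import struct
from dataclasses import dataclass

# Flag bits in the low byte of header bytes 12-13, lowest bit first.
FLAG_NAMES = ["FIN", "SYN", "RST", "PSH", "ACK", "URG", "ECE", "CWR"]


@dataclass
class TcpHeader:
    src_port: int
    dst_port: int
    seq: int
    ack: int
    header_len: int    # in bytes
    flags: frozenset
    rwnd: int
    checksum: int
    urgent_ptr: int


def parse_tcp_header(segment: bytes) -> TcpHeader:
    if len(segment) < 20:
        raise ValueError("a TCP header is at least 20 bytes long")
    # "!" = network byte order; H = 16-bit and I = 32-bit unsigned fields.
    src, dst, seq, ack, off_flags, rwnd, csum, urg = struct.unpack("!HHIIHHHH", segment[:20])
    header_len = (off_flags >> 12) * 4      # 4-bit field counts 32-bit words
    flags = frozenset(name for bit, name in enumerate(FLAG_NAMES) if off_flags & (1 << bit))
    return TcpHeader(src, dst, seq, ack, header_len, flags, rwnd, csum, urg)


# A SYN header: header length 5 words, only the SYN bit (0x02) set.
# The checksum is left as 0 here; this sketch does not compute it.
raw = struct.pack("!HHIIHHHH", 26145, 80, 1000, 0, (5 << 12) | 0x02, 65535, 0, 0)
hdr = parse_tcp_header(raw)
print(hdr.header_len, sorted(hdr.flags), hdr.seq)  # 20 ['SYN'] 1000
```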

TCP and Reliable data transfer

TCP creates a reliable data transfer service on top of IP’s unreliable best-effort service.

TCP’s reliable data transfer service ensures that the data stream that a process reads out of its TCP receive buffer is uncorrupted, without gaps, without duplication, and in sequence; that is, the byte stream is the same byte stream that was sent by the end system on the other side of the connection.

A simplified TCP sender that uses only timeouts to recover from lost segments

There are three significant events related to data transmission and retransmission in the TCP sender.

Data received from the application

- TCP receives data from the application, encapsulates the data in a segment, and passes the segment to IP. Note that each segment includes a sequence number that is the byte-stream number of the first data byte in the segment. If the timer is not already running for some other segment, TCP starts the timer when the segment is passed to IP.

Timer timeout

- The second significant event is the timeout. TCP responds to the timeout event by retransmitting the segment that caused the timeout. TCP then restarts the timer.

ACK receipt

- The third major event that the TCP sender must handle is the arrival of an acknowledgment segment (ACK) from the receiver (more specifically, a segment containing a valid ACK field value).

- Because acknowledgments are cumulative, an ACK carrying the value y acknowledges all bytes before byte y. If y is larger than the sequence number of the oldest unacknowledged byte, the sender updates its record of that byte and restarts the timer if any segments are still unacknowledged.
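The three events can be sketched as a small Python class. This is an illustrative model under simplifying assumptions: each call to `on_app_data` carries at most one MSS of data, the timer is reduced to a `timer_running` flag with no real clock, and the names `SimplifiedTcpSender`, `send_base`, and `next_seq_num` are hypothetical. The `sent` list stands in for segments handed to IP.

```python
class SimplifiedTcpSender:
    """Timeout-only TCP sender model; names and structure are illustrative."""

    def __init__(self, initial_seq: int, mss: int) -> None:
        self.mss = mss
        self.next_seq_num = initial_seq        # byte-stream number of the next new byte
        self.send_base = initial_seq           # oldest unacknowledged byte
        self.unacked: dict[int, bytes] = {}    # seq -> payload awaiting an ACK
        self.timer_running = False
        self.sent: list[tuple[int, bytes]] = []  # segments passed to "IP"

    def on_app_data(self, data: bytes) -> None:
        """Event 1: data received from the application."""
        if len(data) > self.mss:
            raise ValueError("this sketch sends at most one MSS per call")
        seq = self.next_seq_num                # number of the segment's first byte
        self.unacked[seq] = data
        self.sent.append((seq, data))
        self.timer_running = True              # starts the timer if it was not running
        self.next_seq_num += len(data)

    def on_timeout(self) -> None:
        """Event 2: retransmit the oldest unacknowledged segment, restart the timer."""
        if self.unacked:
            seq = min(self.unacked)
            self.sent.append((seq, self.unacked[seq]))
            self.timer_running = True

    def on_ack(self, y: int) -> None:
        """Event 3: cumulative ACK; y is the next byte the receiver expects."""
        if y > self.send_base:
            self.send_base = y
            for seq in [s for s, d in self.unacked.items() if s + len(d) <= y]:
                del self.unacked[seq]
            self.timer_running = bool(self.unacked)  # keep timing only if data is outstanding


sender = SimplifiedTcpSender(initial_seq=0, mss=1000)
for _ in range(3):
    sender.on_app_data(b"x" * 1000)       # segments with seq 0, 1000, 2000
sender.on_ack(1000)                       # first segment acknowledged
sender.on_timeout()                       # retransmits the segment with seq 1000
sender.on_ack(3000)                       # cumulative ACK covers everything
print([seq for seq, _ in sender.sent])    # [0, 1000, 2000, 1000]
print(sender.timer_running)               # False
```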

We now discuss a few modifications that most TCP implementations employ.

Doubling the Timeout Interval

The first concerns the length of the timeout interval after a timer expiration.

- In this modification, TCP retransmits the not-yet-acknowledged segment with the smallest sequence number whenever the timeout event occurs. But each time TCP retransmits, it sets the next timeout interval to twice the previous value.

- The timer expiration is most likely caused by congestion in the network, that is, too many packets arriving at one (or more) router queues in the path between the source and destination, causing packets to be dropped or long queuing delays.

- This modification provides a limited form of congestion control.

- TCP acts politely in times of congestion, with each sender retransmitting after longer and longer intervals.
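A few lines of Python show how quickly the interval grows under this rule. The 0.75-second starting value and the function name `backoff_intervals` are illustrative assumptions; real implementations typically also place an upper bound on the interval, which this sketch omits.

```python
def backoff_intervals(initial_timeout: float, retransmissions: int) -> list[float]:
    """Successive timeout intervals, doubling after each expiration."""
    intervals = []
    timeout = initial_timeout
    for _ in range(retransmissions):
        intervals.append(timeout)
        timeout *= 2                  # each timeout doubles the next interval
    return intervals


print(backoff_intervals(0.75, 4))  # [0.75, 1.5, 3.0, 6.0]
```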

Fast Retransmit

One of the problems with timeout-triggered retransmissions is that the timeout period can be relatively long.

- When a segment is lost, this long timeout period forces the sender to delay resending the lost packet, thereby increasing the end-to-end delay. Fortunately, the sender can often detect packet loss well before the timeout event occurs by noting so-called duplicate ACKs.

- A duplicate ACK is an ACK that reacknowledges a segment for which the sender has already received an earlier acknowledgment.

When a TCP receiver receives a segment with a sequence number larger than the next expected, in-order sequence number, it detects a gap in the data stream, that is, a missing segment. It simply reacknowledges (that is, generates a duplicate ACK for) the last in-order byte of data it has received.

If the TCP sender receives three duplicate ACKs for the same data, it takes this as an indication that the segment following the segment that has been ACKed three times has been lost. It then performs a fast retransmit, resending the missing segment before that segment's timer expires.
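A minimal sketch of sender-side duplicate-ACK counting, assuming each ACK value is the cumulative "next expected byte" number; the class name `FastRetransmitDetector` and the ACK values in the example are hypothetical. The threshold of three duplicate ACKs is the standard fast-retransmit trigger.

```python
from typing import Optional


class FastRetransmitDetector:
    """Counts duplicate cumulative ACKs; names are illustrative."""

    DUP_ACK_THRESHOLD = 3

    def __init__(self) -> None:
        self.last_ack: Optional[int] = None
        self.dup_count = 0

    def on_ack(self, ack: int) -> Optional[int]:
        """Return the sequence number to fast-retransmit, or None."""
        if self.last_ack is None or ack > self.last_ack:
            self.last_ack = ack           # new data acknowledged
            self.dup_count = 0
        elif ack == self.last_ack:
            self.dup_count += 1           # receiver is still waiting for byte `ack`
            if self.dup_count == self.DUP_ACK_THRESHOLD:
                return ack                # resend the segment starting at this byte
        return None


detector = FastRetransmitDetector()
# The segment starting at byte 2000 is lost; later segments trigger duplicate ACKs.
print([detector.on_ack(a) for a in [1000, 2000, 2000, 2000, 2000]])
# [None, None, None, None, 2000]
```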

Flow Control in TCP

TCP provides a flow-control service to its applications to eliminate the possibility of the sender overflowing the receiver’s buffer.

- Flow control is thus a speed-matching service: it matches the rate at which the sender is sending against the rate at which the receiving application is reading.

- TCP provides flow control by having the sender maintain a variable called the receive window.

- The receive window is used to give the sender an idea of how much free buffer space is available at the receiver. Because TCP is full-duplex, the sender at each side of the connection maintains a separate receive window.
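The receive-window bookkeeping can be written out in a few lines. This sketch borrows the variable names `RcvBuffer`, `LastByteRcvd`, `LastByteRead`, `LastByteSent`, and `LastByteAcked` from common textbook presentations of TCP (written here in snake_case); they are descriptive names for illustration, not header fields, and the byte counts in the example are made up. The receiver advertises the free space in its buffer, and the sender keeps its unacknowledged data within that amount.

```python
def receive_window(rcv_buffer: int, last_byte_rcvd: int, last_byte_read: int) -> int:
    """Free space the receiver can advertise in the receive window field."""
    buffered = last_byte_rcvd - last_byte_read   # received but not yet read by the app
    return rcv_buffer - buffered


def sender_may_send(last_byte_sent: int, last_byte_acked: int, rwnd: int, nbytes: int) -> bool:
    """Flow-control check: unacknowledged data must stay within rwnd."""
    unacked = last_byte_sent - last_byte_acked
    return unacked + nbytes <= rwnd


rwnd = receive_window(rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)
print(rwnd)                                      # 2096
print(sender_may_send(5000, 4000, rwnd, 1000))   # True  (2000 <= 2096)
print(sender_may_send(5000, 4000, rwnd, 1200))   # False (2200 > 2096)
```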

TCP Connection Management

TCP Connection Management is important since TCP connection establishment can significantly add to perceived delays.

Suppose a process running in one host (client) wants to connect with another process in another host (server). The client application process first informs the client TCP that it wants to establish a connection to a process in the server. The TCP in the client then proceeds to establish a TCP connection with the TCP in the server in the following manner:

Step 1

- The client-side TCP first sends a special TCP segment to the server-side TCP.

- This special segment contains no application-layer data, but the SYN bit in the flag field of the segment's header is set to 1. For this reason, this special segment is referred to as a SYN segment.

- In addition, the client randomly chooses an initial sequence number (client_isn) and puts this number in the sequence number field of the initial TCP SYN segment.

- This segment is encapsulated within an IP datagram and sent to the server.

- There has been considerable interest in properly randomizing the choice of the client_isn to avoid specific security attacks.

Step 2

Once the IP datagram containing the TCP SYN segment arrives at the server host, the server extracts the TCP SYN segment from the datagram, allocates the TCP buffers and variables to the connection, and sends a connection-granted segment to the client TCP.

- This connection-granted segment also contains no application-layer data. It does, however, contain three crucial pieces of information in the segment header. First, the SYN bit is set to 1. Second, the acknowledgment field of the TCP segment header is set to client_isn + 1. Third, the server chooses its own initial sequence number (server_isn) and puts this value in the sequence number field of the TCP segment header.

- This connection-granted segment is saying, in effect, “I received your SYN packet to start a connection with your initial sequence number (client_isn). I agree to establish this connection. My initial sequence number is server_isn.”

- The connection-granted segment is referred to as a SYNACK segment.

Step 3

- Upon receiving the SYNACK segment, the client also allocates buffers and variables to the connection.

- The client host then sends the server yet another segment. This last segment acknowledges the server’s connection-granted segment (the client puts the value server_isn + 1 in the acknowledgment field of the TCP segment header).

- The SYN bit is set to zero, since the connection is established. This third segment of the three-way handshake may carry client-to-server data in the segment payload.
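The sequence and acknowledgment numbers exchanged in the three steps can be traced with a short simulation. It models only the header values, not real network traffic; the `Segment` dataclass and the `three_way_handshake` function are hypothetical names, and `secrets.randbits(32)` merely stands in for however a real TCP stack randomizes its initial sequence numbers. Arithmetic is taken modulo 2^32 because sequence numbers are 32-bit values that wrap around.

```python
import secrets
from dataclasses import dataclass

SEQ_SPACE = 2 ** 32   # sequence numbers are 32-bit and wrap around


@dataclass
class Segment:
    syn: bool
    ack_flag: bool
    seq: int
    ack: int = 0
    payload: bytes = b""


def three_way_handshake() -> list[Segment]:
    client_isn = secrets.randbits(32)     # random initial sequence numbers
    server_isn = secrets.randbits(32)
    # Step 1: SYN segment, no application data.
    syn = Segment(syn=True, ack_flag=False, seq=client_isn)
    # Step 2: SYNACK acknowledges client_isn + 1 and carries server_isn.
    synack = Segment(syn=True, ack_flag=True, seq=server_isn,
                     ack=(client_isn + 1) % SEQ_SPACE)
    # Step 3: SYN bit cleared; acknowledges server_isn + 1. The SYN consumed
    # one sequence number, so the client's next byte is client_isn + 1.
    ack = Segment(syn=False, ack_flag=True, seq=(client_isn + 1) % SEQ_SPACE,
                  ack=(server_isn + 1) % SEQ_SPACE)
    return [syn, synack, ack]


for step, seg in enumerate(three_way_handshake(), start=1):
    print(step, seg.syn, seg.ack_flag, seg.seq, seg.ack)
```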

TCP Congestion Control

TCP provides a reliable transport service between two processes running on different hosts. Another critical component of TCP is its congestion-control mechanism.

The approach taken by TCP is to have each sender limit the rate at which it sends traffic into its connection as a function of perceived network congestion. If a TCP sender perceives little congestion on the path between itself and the destination, then the TCP sender increases its send rate. If the sender perceives congestion along the path, then the sender reduces its send rate.

Now, this approach raises three questions.

How does a TCP sender limit the rate at which it sends traffic into its connection?

- A TCP connection consists of a receive buffer, a send buffer, and several variables (LastByteRead, rwnd, and so on). The TCP congestion-control mechanism operating at the sender keeps track of an additional variable, the congestion window.

- The congestion window (cwnd) imposes a constraint on the rate at which a TCP sender can send traffic into the network. Specifically, the amount of unacknowledged data at a sender may not exceed the minimum of the congestion window and the receive window, min(cwnd, rwnd).

- Consider a connection for which loss and packet transmission delays are negligible. Then, roughly, at the beginning of every RTT, the constraint permits the sender to send cwnd bytes of data into the connection. At the end of the RTT, the sender receives acknowledgments for the data. Thus the sender’s send rate is roughly cwnd/RTT bytes/sec. By adjusting the value of cwnd, the sender can adjust the rate at which it sends data into its connection.
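As a rough worked example of the cwnd/RTT estimate and the min(cwnd, rwnd) limit, here is a small Python sketch. The 1460-byte MSS, the 10-segment window, the 100 ms RTT, the rwnd value, and the function names are assumptions chosen for illustration.

```python
def allowed_in_flight(cwnd: int, rwnd: int) -> int:
    """Unacknowledged data may not exceed the smaller of the two windows."""
    return min(cwnd, rwnd)


def approx_send_rate(window_bytes: int, rtt_ms: float) -> float:
    """Roughly one window per RTT, in bytes per second."""
    return window_bytes * 1000 / rtt_ms


mss = 1460
cwnd = 10 * mss          # 14,600 bytes
rwnd = 65535             # receiver has plenty of free buffer space
window = allowed_in_flight(cwnd, rwnd)
print(window, approx_send_rate(window, rtt_ms=100))   # 14600 146000.0
```

Here cwnd is the binding constraint; if the receiver advertised an rwnd smaller than 14,600 bytes, rwnd would cap the rate instead.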

How does a TCP sender perceive that there is congestion on the path between itself and the destination?

Let us define a “loss event” at a TCP sender as the occurrence of either a timeout or the receipt of three duplicate ACKs from the receiver.

When there is excessive congestion, one (or more) router buffers along the path overflow, causing a datagram (containing a TCP segment) to be dropped. The dropped datagram results in a loss event at the sender (either a timeout or the receipt of three duplicate ACKs), which the sender takes as an indication of congestion.

What algorithm should the sender use to change its send rate as a function of perceived end-to-end congestion?

- TCP takes the arrival of acknowledgments for previously unacknowledged segments as an indication that all is well, that is, that segments being transmitted into the network are being successfully delivered to the destination.

- TCP uses acknowledgments to increase its congestion window size (and hence its transmission rate).

- If acknowledgments arrive at a relatively slow rate (e.g., if the end-to-end path has a high delay or contains a low-bandwidth link), then the congestion window is increased at a relatively slow rate.

- If acknowledgments arrive at a high rate, then the congestion window is increased more quickly.

- TCP is said to be self-clocking because it uses acknowledgments to trigger (or clock) its increase in congestion window size.

How do TCP senders determine their sending rates so that they don’t congest the network but still make use of all the available bandwidth?

- A lost segment implies congestion, and hence the TCP sender’s rate should be decreased when a segment is lost.

- An acknowledged segment indicates that the network is delivering the sender’s segments to the receiver, and hence the sender’s rate can be increased when an ACK arrives for a previously unacknowledged segment.

Bandwidth probing

- TCP’s strategy for adjusting its transmission rate is to increase its rate in response to arriving ACKs until a loss event occurs, at which point the transmission rate is decreased.

- The TCP sender increases its transmission rate to probe for the rate at which congestion onset begins, backs off from that rate, and then begins probing again to see if the congestion onset rate has changed.

- There is no explicit signaling of congestion state by the network (ACKs and loss events serve as implicit signals), and each TCP sender acts on local information asynchronously from other TCP senders.
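To visualize probing, here is a toy Python loop. It is deliberately simplified and is not TCP's full algorithm: the fixed `capacity_mss` limit is a hypothetical stand-in for the point where loss begins, the window grows by 1 MSS per RTT while no loss occurs, and it is halved when a loss occurs. The output shows the back-off-then-probe-again pattern.

```python
def probe_bandwidth(capacity_mss: int, rtts: int, start_cwnd: int = 1) -> list[int]:
    """cwnd (in MSS) at the start of each RTT under a toy probing rule."""
    cwnd = start_cwnd
    history = []
    for _ in range(rtts):
        history.append(cwnd)
        if cwnd > capacity_mss:        # hypothetical loss: path cannot carry this window
            cwnd = max(cwnd // 2, 1)   # back off
        else:
            cwnd += 1                  # no loss: keep probing upward
    return history


print(probe_bandwidth(capacity_mss=4, rtts=10))  # [1, 2, 3, 4, 5, 2, 3, 4, 5, 2]
```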

TCP Congestion-Control Algorithm

The algorithm has three major components.

- Slow start

- Congestion avoidance

- Fast recovery

Slow start and congestion avoidance are mandatory components of TCP, differing in how they increase the size of cwnd in response to received ACKs.

Slow Start

When a TCP connection begins, the value of cwnd is typically initialized to a small value of 1 MSS (maximum segment size), resulting in an initial sending rate of roughly MSS/RTT.

In the slow-start state, the value of cwnd begins at 1 MSS and increases by 1 MSS every time a transmitted segment is first acknowledged. Because every segment sent during one RTT produces an ACK that adds 1 MSS, cwnd doubles every RTT; despite its name, slow start grows the sending rate exponentially.

- If there is a loss event (i.e., congestion) indicated by a timeout, the TCP sender sets the value of cwnd to 1 MSS and begins the slow start process anew. It also sets the value of the slow start threshold (ssthresh) to half of the congestion window value at the time congestion was detected.

- When the value of cwnd equals ssthresh, slow start ends, and TCP transitions into congestion avoidance mode.

- If three duplicate ACKs are detected, TCP performs a fast retransmit and enters the fast recovery state.
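A minimal sketch of slow start in MSS units, assuming no losses and one ACK per segment; the function name and the ssthresh values in the example are illustrative. Each ACK adds 1 MSS, so the window at the start of each RTT doubles until it reaches ssthresh.

```python
def slow_start_trace(ssthresh_mss: int, max_rtts: int) -> list[int]:
    """cwnd (in MSS) at the start of each RTT while in slow start, assuming no loss."""
    cwnd = 1
    trace = []
    for _ in range(max_rtts):
        trace.append(cwnd)
        if cwnd >= ssthresh_mss:
            break                      # reached ssthresh: switch to congestion avoidance
        for _ in range(cwnd):          # one ACK arrives for each of the cwnd segments sent
            cwnd += 1                  # each newly acknowledged segment adds 1 MSS
            if cwnd >= ssthresh_mss:
                break
    return trace


print(slow_start_trace(ssthresh_mss=16, max_rtts=10))  # [1, 2, 4, 8, 16]
```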

Congestion Avoidance

On entry to the congestion avoidance state, the value of cwnd is approximately half its value when congestion was last encountered. Congestion could be just around the corner.

Instead of doubling the value of cwnd every RTT, TCP adopts a more conservative approach and increases the value of cwnd by just a single MSS every RTT.

Fast Recovery

In fast recovery, the value of cwnd increases by 1 MSS for every duplicate ACK received for the missing segment that caused TCP to enter the fast-recovery state.

Eventually, when an ACK arrives for the missing segment, TCP deflates cwnd (setting it to ssthresh) and enters the congestion-avoidance state.

If a timeout event occurs, fast recovery transitions to the slow-start state after performing the same actions as in slow start and congestion avoidance: the value of cwnd is set to 1 MSS, and the value of ssthresh is set to half the value of cwnd when the loss event occurred.
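The three states can be combined into one small state machine. This sketch works in MSS units and follows a simplified version of TCP Reno, including the Reno rule for entering fast recovery: ssthresh becomes half of cwnd and cwnd becomes ssthresh + 3 MSS. The class name, the state labels, and the default initial ssthresh of 64 MSS are illustrative assumptions, and the fast retransmit itself is only marked by a comment.

```python
class RenoCongestionControl:
    """Simplified TCP Reno congestion control; cwnd and ssthresh are in MSS."""

    def __init__(self, ssthresh: float = 64.0) -> None:
        self.state = "slow_start"
        self.cwnd = 1.0
        self.ssthresh = ssthresh
        self.dup_acks = 0

    def on_new_ack(self) -> None:
        self.dup_acks = 0
        if self.state == "fast_recovery":
            self.cwnd = self.ssthresh             # deflate cwnd
            self.state = "congestion_avoidance"
        elif self.state == "slow_start":
            self.cwnd += 1                        # +1 MSS per new ACK
            if self.cwnd >= self.ssthresh:
                self.state = "congestion_avoidance"
        else:
            self.cwnd += 1 / self.cwnd            # about +1 MSS per RTT

    def on_dup_ack(self) -> None:
        if self.state == "fast_recovery":
            self.cwnd += 1                        # +1 MSS per additional duplicate ACK
            return
        self.dup_acks += 1
        if self.dup_acks == 3:
            # Fast retransmit of the missing segment would happen here.
            self.ssthresh = self.cwnd / 2
            self.cwnd = self.ssthresh + 3
            self.state = "fast_recovery"

    def on_timeout(self) -> None:
        self.ssthresh = self.cwnd / 2
        self.cwnd = 1.0
        self.dup_acks = 0
        self.state = "slow_start"


cc = RenoCongestionControl(ssthresh=8)
for _ in range(7):
    cc.on_new_ack()
print(cc.state, cc.cwnd)   # congestion_avoidance 8.0
for _ in range(3):
    cc.on_dup_ack()
print(cc.state, cc.cwnd)   # fast_recovery 7.0
cc.on_new_ack()
print(cc.state, cc.cwnd)   # congestion_avoidance 4.0
```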

Did you find this post helpful?

I would be grateful if you let me know by sharing it on Twitter!

Follow me @ParthS0007 for more tech and blogging content :)
